In 2019, after a combination of organic growth and two major acquisitions (Clarient and Genoptix), NeoGenomics decided to throw its proverbial hat into the data ring. It had long held data aspirations, and by 2019 it had become one of the leading, if not the leading, multi-modality oncology testing labs in the country, with approximately 20% market share.

Like many other “big” labs, NeoGenomics had been selling its de-identified data to data aggregators for years. Companies like HealthVerity, IQVIA, and Symphony Health had purchased enormous data sets from diagnostic labs and EHR vendors for pennies on the dollar, which they turned around and sold to Pharma and CROs.

By 2019, NeoGenomics realized that it was processing critical lab testing for over 500,000 cancer patients a year. It had a strong desire to move up the data value chain, so it decided to create a new Informatics Division.

Although there was a general desire to capture additional value from the data, there was no clear, coherent strategy for doing so, other than bypassing data aggregators and selling data directly to Pharma.

The first thing our team did was develop a long-term platform strategy to unify the underlying technology around key stakeholders, including providers, payers, patients, and Pharma. We adopted a microservices strategy so that each “solution”, which was generally more of a front-end UI/UX experience layer, could leverage shared key components.

While some of the solutions involved major undertakings, including deconstructing loosely defined underlying data models, we also put significant effort into achieving short-term wins.

The first difficult decision we made was to pivot away from a data lake built on top of a proprietary database vendor's platform. That decision had been made nine months before our arrival, and the project was already months behind schedule with millions of dollars invested. Instead, we moved everything to Snowflake. The entire journey from vendor selection to live deployment took approximately six months, and operating costs were projected at a couple hundred thousand dollars per year during the first few years. That saved the organization millions of dollars, not to mention vastly improving its capabilities.

A key challenge for our data sales team was the painstaking pre-sales data queries, or “counts.” Typically, when a pharmaceutical company wants to understand how to improve adoption of newly FDA-approved immunotherapies, it evaluates the testing behavior of treating physicians.

If, for example, a new drug requires PD-L1 and KRAS biomarker testing for a specific cancer at a specific stage of progression, they look to see whether the correct testing was ordered. If it was not, they can infer that the provider may be unaware of the therapy and its potential benefit to patients. From there, they determine the number of such providers in each zip code so that they can formulate an e-detailing campaign.
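As a rough illustration of the count described above, the Python sketch below finds providers whose patients in the target indication lacked any of the required biomarker tests, then counts those providers per zip code. The `CaseRecord` shape, its field names, and the function name are hypothetical. It also assumes each provider maps to a single zip code and that the lab data already uses canonical cancer-type, stage, and biomarker labels. It is not NeoGenomics' actual schema or query.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseRecord:
    """Hypothetical de-identified record: one tested patient case."""
    provider_id: str
    provider_zip: str
    cancer_type: str
    stage: str
    biomarkers_tested: frozenset


def gap_providers_by_zip(orders, cancer_type, stage,
                         required=frozenset({"PD-L1", "KRAS"})):
    """Count distinct providers per zip code who had at least one patient in
    the target indication without every required biomarker test ordered."""
    gaps = defaultdict(set)  # zip code -> provider IDs with a testing gap
    for o in orders:
        if o.cancer_type != cancer_type or o.stage != stage:
            continue  # patient is outside the drug's indication
        if not required <= o.biomarkers_tested:  # subset check on test sets
            gaps[o.provider_zip].add(o.provider_id)
    # A provider with several gap patients is still counted once per zip.
    return {zip_code: len(ids) for zip_code, ids in gaps.items()}
```

For example, with hypothetical records where one provider ordered both tests, a second ordered only PD-L1, and a third missed tests on two patients, the result counts the second and third providers once each in their respective zip codes. Using sets of provider IDs is what keeps repeat patients from inflating the provider count.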

While the commercialization strategies and techniques may seem incredibly inefficient, the data “product” seems almost trivial from the lab's perspective, and in most cases it should be. However, NeoGenomics, like most labs, never designed its underlying systems to support bespoke, on-demand reporting. As a result, very few people in the organization had the understanding, skill set, and wherewithal to run SQL queries against the data mart. Sounds pretty simple, right? Again, it should be, if the data model is well structured, documented, and understood.

Instead, what happened in real life is that a potential Pharma client would send a list of inclusion and exclusion criteria. On the surface, most of the criteria seemed pretty basic: age range or cutoff, gender, diagnosis, cancer type, stage, and target biomarker(s) tested.

The first challenge, which I won't go into in depth here, is that key demographic data is frequently unavailable. There's a reason for it, which could easily fill another case study on “optimizing data capture at time of order,” but let's save that for another day.

Let's assume that we have more or less all of the data in some shape or form in our data mart. The real challenge is that it is almost certainly not maintained in the “shape or form” that our client wants. Instead, someone needs to perform some type of translation on much of the data. A simple query that should take someone 10 minutes typically took a week or longer because of the lengthy back-and-forth over defining the query.

Can you imagine what this does to your sales cycle and your conversion rate?

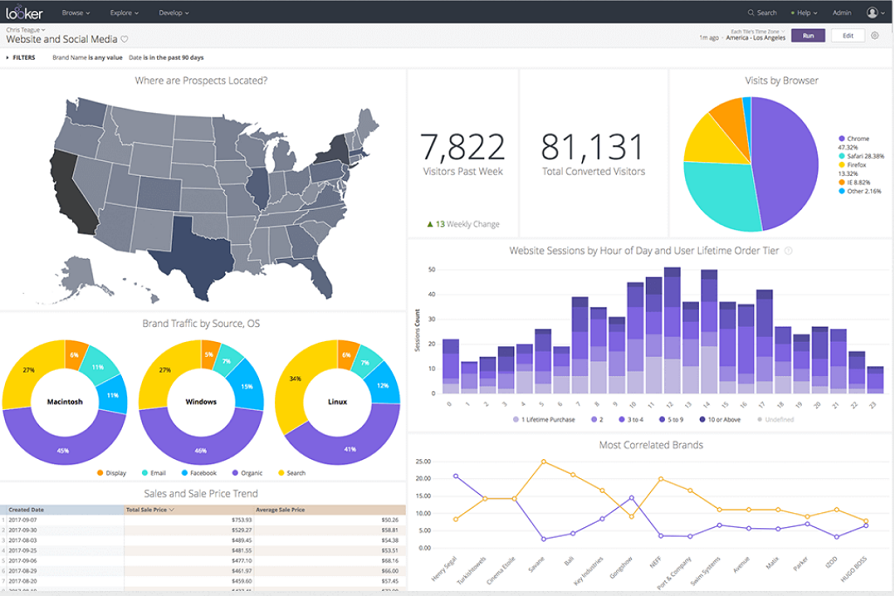

Instead, we resolved many of the translation issues when setting up our data lake in Snowflake. From there, we developed a web-based “Cohort Builder” application that initially enabled internal users to run custom queries on demand. Combined with the data lake, the application turned anyone into a power user. The long-term goal was to expose the query application to external users so that they could run self-serve queries, identify the target data set, and generate a report in minutes instead of weeks, completely removing the need for developers or sales support.
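To make the two steps above concrete, here is a minimal Python sketch. It first translates raw records into a canonical shape once, and then evaluates a client's inclusion and exclusion criteria against the normalized records to produce a count. The mapping tables, field names, and `Criteria` fields are all hypothetical assumptions for illustration. This is not the actual Cohort Builder, which ran its queries against Snowflake rather than in-memory Python.

```python
from dataclasses import dataclass, field
from typing import Iterable, Optional

# Hypothetical translation tables: raw source values -> canonical values.
GENDER_MAP = {"M": "male", "MALE": "male", "F": "female", "FEMALE": "female"}
BIOMARKER_MAP = {"PDL1": "PD-L1", "KRAS": "KRAS"}  # keys are upper-case, no hyphens


def normalize(raw: dict) -> dict:
    """Translate one raw lab record into the canonical cohort shape."""
    biomarkers = set()
    for b in raw.get("biomarkers", []):
        key = b.strip().upper().replace("-", "")
        biomarkers.add(BIOMARKER_MAP.get(key, key))
    age = raw.get("age")
    return {
        "age": int(age) if age is not None else None,  # demographics may be missing
        "gender": GENDER_MAP.get(str(raw.get("gender", "")).strip().upper(), "unknown"),
        "cancer_type": str(raw.get("cancer_type", "")).strip().lower(),
        "stage": str(raw.get("stage", "")).strip().upper(),
        "biomarkers": biomarkers,
    }


@dataclass
class Criteria:
    """Hypothetical inclusion/exclusion criteria; None means 'no constraint'."""
    min_age: Optional[int] = None
    max_age: Optional[int] = None
    genders: Optional[set] = None
    cancer_types: Optional[set] = None
    stages: Optional[set] = None
    required_biomarkers: set = field(default_factory=set)  # must all be tested
    excluded_biomarkers: set = field(default_factory=set)  # exclude if any tested

    def matches(self, p: dict) -> bool:
        age = p["age"]
        if (self.min_age is not None or self.max_age is not None) and age is None:
            return False  # cannot confirm an age criterion without an age
        if self.min_age is not None and age < self.min_age:
            return False
        if self.max_age is not None and age > self.max_age:
            return False
        if self.genders is not None and p["gender"] not in self.genders:
            return False
        if self.cancer_types is not None and p["cancer_type"] not in self.cancer_types:
            return False
        if self.stages is not None and p["stage"] not in self.stages:
            return False
        if not self.required_biomarkers <= p["biomarkers"]:
            return False
        return not (self.excluded_biomarkers & p["biomarkers"])


def count_cohort(records: Iterable[dict], criteria: Criteria) -> int:
    """Return the pre-sales 'count': records matching every criterion."""
    return sum(1 for r in records if criteria.matches(normalize(r)))
```

The design choice this sketch is meant to show is the separation between the steps. Translation happens once, in `normalize`, so a new client request only requires filling in a `Criteria` object rather than renegotiating what "PDL1" or "F" means in the source data. Records with missing demographics are conservatively excluded whenever an age criterion is set.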